I recently conducted an “Agentic AI 101” course at Persistent Systems.

I started with the fundamentals (Agent, Tool, RAG, etc.), then moved on to hands-on implementations in three flavors to suit a mixed audience: Langflow, Amazon Bedrock Agents, and finally LangGraph. The tricky part wasn’t teaching the content. It was designing a good subjective assessment.

Most ideas I came up with fell into one of two buckets:

- Too simplistic -> low value, little to no learning

- Too complex -> over-engineered setup, everyone gets stuck on plumbing

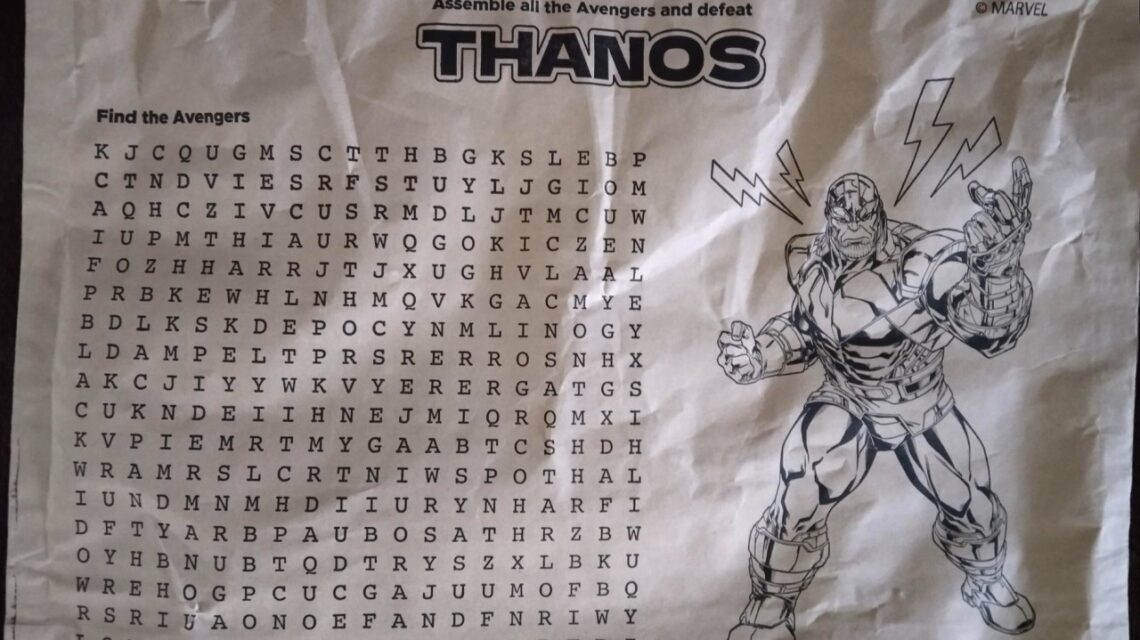

I discarded idea after idea… until Blinkit arrived with a Goldilocks-perfect solution (or should I say, problem). The delivery guy handed me my groceries in a brown paper bag with an Avengers-themed word search puzzle printed on it. On a whim, I snapped a photo and tried to “solve” the puzzle using ChatGPT and Claude.

Both hallucinated. Perfect!

That became the assessment problem.

In the next live session, I walked the class through the basic steps of my Agentic AI solutioning approach:

- First, convert a vague one-line problem statement into a clear User–System interaction dialogue.

- Then, identify the Agents and write a proper Agent Specification document based on that dialogue.
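To make the second step a little more concrete, here is a minimal sketch of how Agent Specification entries could be captured as data. The `AgentSpec` fields, the two agent names, the `find_words` tool name, and the `unresolved_inputs` check are all hypothetical and for illustration only; they are not taken from the actual specification used in the course.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class AgentSpec:
    """Hypothetical shape of one entry in an Agent Specification document."""
    name: str
    responsibility: str
    inputs: List[str] = field(default_factory=list)
    outputs: List[str] = field(default_factory=list)
    tools: List[str] = field(default_factory=list)


# Hypothetical split for the word search problem. A dialogue such as
# "User: here is a photo of the puzzle and my word list.
#  System: here is where each word is located."
# suggests one agent that reads the grid and one that finds the words.
SPECS = [
    AgentSpec(
        name="grid_reader",
        responsibility="Transcribe the puzzle photo into rows of letters",
        inputs=["puzzle_image"],
        outputs=["grid"],
    ),
    AgentSpec(
        name="word_finder",
        responsibility="Locate each search word in the grid",
        inputs=["grid", "words"],
        outputs=["word_positions"],
        tools=["find_words"],  # hypothetical tool name
    ),
]


def unresolved_inputs(specs: List[AgentSpec], user_inputs: List[str]) -> List[str]:
    """Return inputs that neither the user nor an earlier agent provides."""
    available = set(user_inputs)
    missing: List[str] = []
    for spec in specs:
        missing.extend(i for i in spec.inputs if i not in available)
        # Outputs of this agent become available to the agents after it.
        available.update(spec.outputs)
    return missing
```

A check like `unresolved_inputs(SPECS, ["puzzle_image", "words"])` returning an empty list is one cheap way to confirm that the spec is consistent with what the user actually supplies in the dialogue.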

You can read more about my process in detail here.

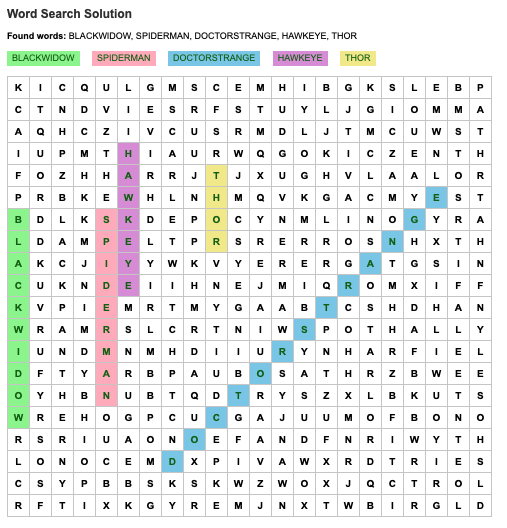

Once that was done, I handed the spec to a GenAI coding assistant (I think I used Amazon Q) and asked it to generate the implementation. The generated implementation was good overall, but it had some gaps, which I filled in by hand. And just like that – a working solution in LangGraph.
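Since both chatbots hallucinated on the puzzle, one reasonable design choice is to leave the letter-by-letter search to a deterministic tool that an agent calls, rather than to the LLM itself. The original LangGraph code isn't shown here, so the following is only a minimal standard-library sketch of such a tool, assuming the grid has already been transcribed from the photo into rows of letters; `find_word`, `find_words`, and the sample grid are hypothetical.

```python
from typing import Dict, List, Optional, Tuple

Cell = Tuple[int, int]

# All 8 straight-line directions: horizontal, vertical, diagonal, forwards and backwards.
DIRECTIONS = [(dr, dc) for dr in (-1, 0, 1) for dc in (-1, 0, 1) if (dr, dc) != (0, 0)]


def find_word(grid: List[str], word: str) -> Optional[Tuple[Cell, Cell]]:
    """Return the (start, end) cells of the first match of word in grid, or None."""
    rows = [row.upper() for row in grid]
    word = word.upper()
    if not word:
        return None
    for r in range(len(rows)):
        for c in range(len(rows[r])):
            for dr, dc in DIRECTIONS:
                rr, cc = r, c
                for ch in word:
                    # Stop as soon as we leave the grid or hit a mismatched letter.
                    if not (0 <= rr < len(rows) and 0 <= cc < len(rows[rr])) or rows[rr][cc] != ch:
                        break
                    rr, cc = rr + dr, cc + dc
                else:
                    # Every letter matched: the end cell is one step back from (rr, cc).
                    return (r, c), (rr - dr, cc - dc)
    return None


def find_words(grid: List[str], words: List[str]) -> Dict[str, Optional[Tuple[Cell, Cell]]]:
    """Look up every search word; None marks a word that is not in the grid."""
    return {w: find_word(grid, w) for w in words}


# Hypothetical toy grid, not the actual puzzle from the paper bag.
grid = ["THORX", "AHULK", "LOKIQ", "ZNRWB"]
print(find_words(grid, ["THOR", "HULK", "LOKI", "IRONMAN"]))
# {'THOR': ((0, 0), (0, 3)), 'HULK': ((1, 1), (1, 4)), 'LOKI': ((2, 0), (2, 3)), 'IRONMAN': None}
```

Returning start and end cells, rather than a yes/no answer, keeps the result easy to highlight in a visualization such as an HTML grid, and a `None` makes a missing word explicit instead of leaving room for a hallucinated position.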

Below is a screenshot of the visualized HTML solution generated by my LangGraph code for a manually supplied set of search words.

Learning Agentic AI need not be boring theory. Have fun while you’re at it. Keep practicing and you will master it. It is inevitable!